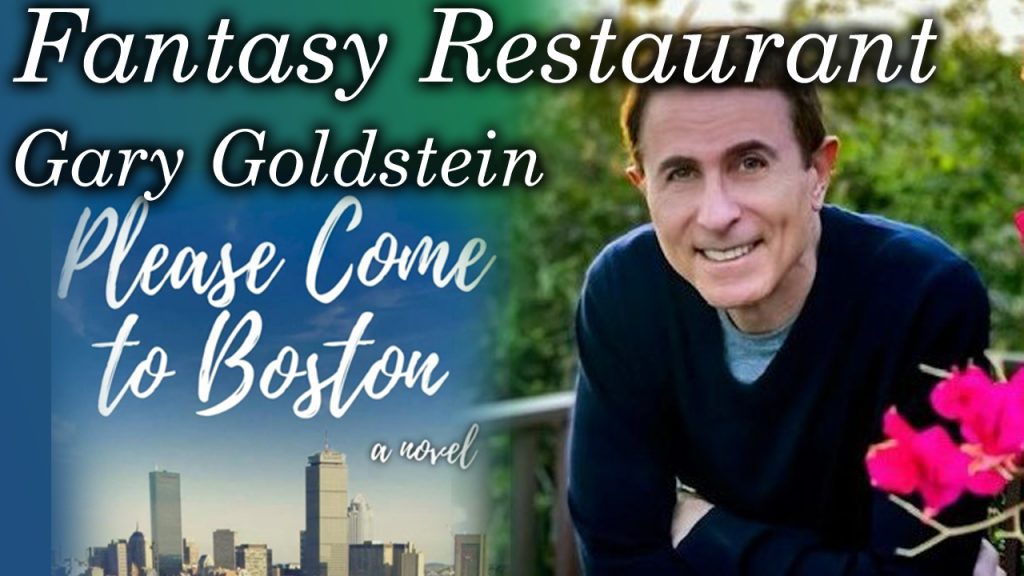

Welcome to “the Fantasy Restaurant,” the warmup exercise for the Why Am I podcast. In it, my guests get to pick their favorite drink, appetizer, main, sides, and dessert…anything goes. Gary puts together a meal that seems to incorporate a lot of the foods found near his NY home…and Asia, and Greece, and Italy LOL. It varies a lot, but it all sounds delicious. I hope you enjoy this meal with Gary.

Help us grow by sharing with someone!

Please show them some love on their socials here: https://twitter.com/GaryGoldsteinLA, https://www.instagram.com/garygoldsteinla/, https://www.garygoldsteinla.com/book-please-come-to-boston.

If you want to support the podcast you can do so via https://www.patreon.com/whyamipod (this gives you access to bonus content including their Fantasy Restaurant!)

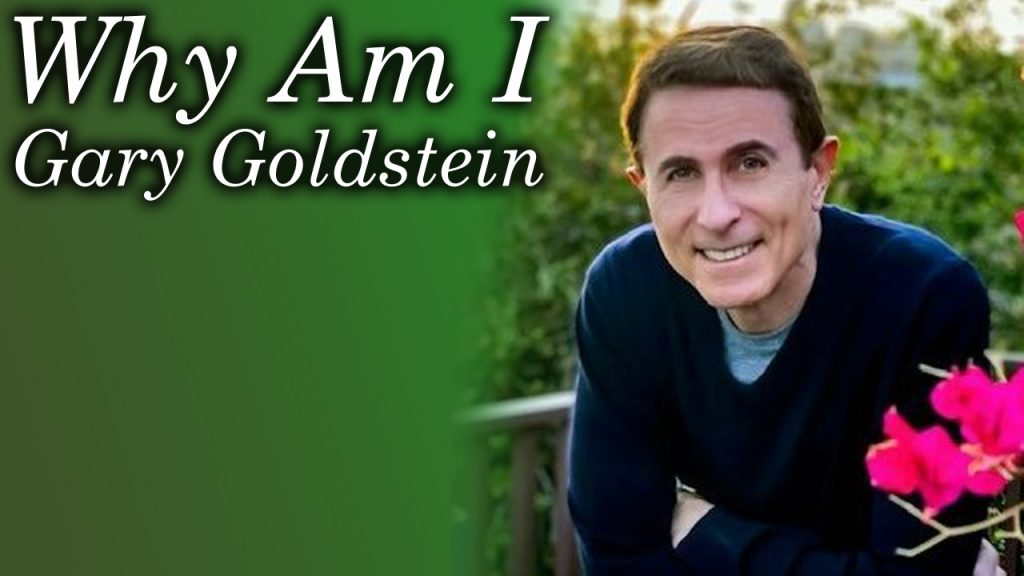

Hey everybody, I’m Greg Sowell and this is Why Am I, a podcast where I talk to interesting people and try to trace a path to where they find themselves today. My guest this go around is Gary Goldstein. This fella writes books, TV shows, and movies. Not only has he written Hallmark movies (which is becoming something of a theme here), but he also wrote for the likes of Beverly Hills 90210, which featured prominently in my younger years. If you find this walk through his mind interesting, I urge you to check out his newest book, Please Come To Boston. At any rate, I hope you enjoy this chat with Gary.


Once we choose a system, we often feel married to it. Migrating our infrastructure often seems more expensive than whatever new price the vendor just handed us for what we are already using. I’ve felt/seen this with software, cloud providers, and hypervisors. While that may be true at first glance, automation can often level the playing field. Case in point, I’m about to demonstrate how to migrate a Rocky VM from VMware to Proxmox VE completely via automation 🙂

Video Demo

Overview

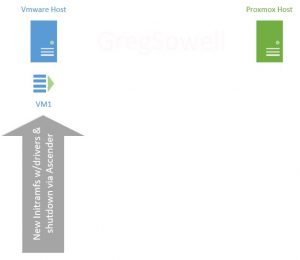

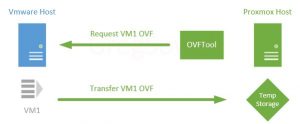

I’ll quickly begin by describing the process with graphics.

First, we have a VMware cluster and a Proxmox cluster. As you can see, I have a virtual machine (VM1) that I want to migrate from VMware over to Proxmox.

My first step is to use automation to connect to the running VM and prep it for migration. My Rocky host didn’t have the virtio drivers in its initramfs, so I needed to add them. The initramfs is a small initial file system that the kernel loads into RAM at boot. It holds the drivers needed to find and mount the real root file system so the boot can continue. Your mileage may vary…perhaps these drivers are already in your initramfs, so test your system as-is and see how it responds. Once the prep work is done, the VM is shut down.

Next, we install VMware’s OVFTool somewhere that can reach vCenter and use it to export the VMs from VMware as OVFs. For my small demo I’m installing it on my Proxmox host and running the migrations directly from there. The exports land in a temporary storage location, either directly on the Proxmox host or on something like an NFS share mounted on the host. If you use an NFS share, you could just as easily run OVFTool from a completely separate server (this has the least impact on your Proxmox host).
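If you go the NFS route, mounting the staging share can be automated too. Below is a minimal sketch using the ansible.posix.mount module. The NFS server and export path are hypothetical. The Proxmox host is assumed to be in your inventory under the same name used later in migrate-vms.yml. /root/migration matches that playbook’s proxmox_migration_dir.

```yaml
- name: Stage OVF exports on NFS (sketch, hypothetical share)
  hosts: proxmox.gregsowell.com
  gather_facts: false
  tasks:
    - name: Mount the share on the migration directory and add it to /etc/fstab
      ansible.posix.mount:
        src: nfs.example.com:/exports/migration   # hypothetical NFS export
        path: /root/migration                     # same path as proxmox_migration_dir
        fstype: nfs
        opts: defaults
        state: mounted                            # mount now and persist the entry in fstab
```

With the share mounted, the export and import steps stay exactly the same; only the location of the migration directory changes.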

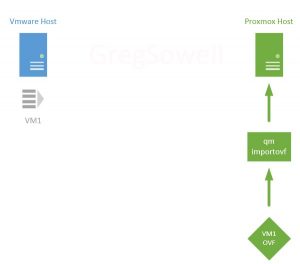

Now on the Proxmox host I’ll use the qm importovf command to bring the newly created VM1 OVF into the system as a VM.

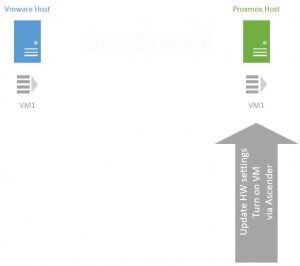

Before starting the VM, we’ll need to modify any hardware settings this specific VM requires. Again, this is a “your mileage may vary” scenario. In my case, I had to switch the BIOS to OVMF (UEFI), add an EFI disk for the new BIOS, change the CPU type, and reconfigure the networking.

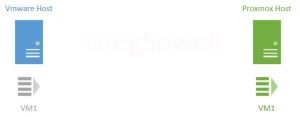

Now the last step is pretty simple; all that needs to be done is to start the new VM.

Playbooks

All of my playbooks can be found here in my git repository.

The whole process took a good bit of work to figure out (mostly down to driver/BIOS issues), so hopefully I can save you some time and effort.

preconfigure-hosts.yml

This playbook’s purpose is to do any preconfiguration work necessary. In my case the prework is to update the initramfs with the virtio drivers and then shut the machine down (OVFTool needs the VM to be powered off before it can export it). Depending on your environment and OS, different things may be required. Here you could also do things like back up network configuration settings so that you can reapply them once the migration is complete.
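As a sketch of that backup idea (not part of my playbook), the tasks below pull each guest’s network config files back to the control node before shutdown. A few assumptions apply:

- You connect as root, as the dracut task effectively requires.
- Configs live in the usual Rocky locations: ifcfg files under /etc/sysconfig/network-scripts (typical on Rocky 8) or NetworkManager keyfiles under /etc/NetworkManager/system-connections (typical on Rocky 9).
- The net-backups/ destination is hypothetical.

```yaml
- name: Back up guest network configuration before migration (sketch)
  hosts: all
  gather_facts: false
  tasks:
    - name: Find ifcfg files and NetworkManager keyfiles
      ansible.builtin.find:
        paths:
          - /etc/sysconfig/network-scripts
          - /etc/NetworkManager/system-connections
        patterns:
          - "ifcfg-*"
          - "*.nmconnection"
      register: net_configs   # a path that does not exist is reported as skipped

    - name: Fetch each file into net-backups/<inventory host>/<original path>
      ansible.builtin.fetch:
        src: "{{ item.path }}"
        dest: net-backups/    # fetch appends the host name and full source path
      loop: "{{ net_configs.files }}"
```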

```yaml
---
- name: Preconfigure the VMWare hosts for migration
  hosts: all
  gather_facts: false

  tasks:
    # Rebuild the initramfs for the running kernel with the virtio drivers Proxmox will present
    - name: Connect to hosts and add virtio drivers to boot(using virtio in proxmox)
      ansible.builtin.shell: 'dracut --add-drivers "virtio_scsi virtio_pci" -f -v /boot/initramfs-`uname -r`.img `uname -r`'
      register: driver_install

    # OVFTool can only export a powered-off VM
    - name: Shutdown the host
      community.general.shutdown:
```

In this playbook I build the new initramfs for the kernel that is currently running (the uname -r portion supplies the kernel version).
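Before shutting the VM down, it can be worth confirming that the rebuild actually worked. These two optional tasks (my addition, not in the repo) could sit between the dracut task and the shutdown task. lsinitrd ships with dracut and lists the files packed into an initramfs. Only virtio_scsi is checked, because on some kernels virtio_pci may be compiled in rather than built as a module, in which case it would never appear as a file.

```yaml
    # Optional: place these between the dracut task and the shutdown task
    - name: List the contents of the rebuilt initramfs
      ansible.builtin.shell: lsinitrd /boot/initramfs-$(uname -r).img
      register: initramfs_contents
      changed_when: false   # read-only check, never reports a change

    - name: Stop before shutdown if virtio_scsi is not in the initramfs
      ansible.builtin.assert:
        that:
          - "'virtio_scsi' in initramfs_contents.stdout"
        fail_msg: "virtio_scsi is missing from the initramfs; leaving the VM running"
```

A failed assert stops that host before the shutdown task, so a VM that would not boot on Proxmox is left running on VMware.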

migrate-vms.yml

Next, the migrate playbook is run. This is what actually exports the OVFs, imports them into Proxmox, and updates all of the settings.

```yaml
---
- name: Use ovftool to pull vms from vmware and import into proxmox
  hosts: all
  gather_facts: false
  vars:
    proxmox_auth: &proxmox_auth
      api_host: proxmox.gregsowell.com
      api_user: "{{ gen2_user }}"
      # api_user: root@pam # username format example
      # Use standard password
      api_password: "{{ gen2_pword }}"
      # Use api token and secret - example format
      # api_token_id: gregisa5.0
      # api_token_secret: 72a72987-ff68-44f1-9fee-c09adaaecf4d
    # Full path to ovftool directory
    ovftool_path: /root/ovftool
    # Hostname or IP of vmware vcenter
    vmware_host: 10.0.2.10
    # Hostname or IP of proxmox
    proxmox_host: proxmox.gregsowell.com
    # Datacenter the VMs are in
    vmware_datacenter: MNS
    # Directory path to vms
    vmware_directory: Greg/ciq
    # VMware username
    # vmware_username: administrator
    vmware_username: "{{ gen1_user }}"
    # VMware password
    # vmware_password: test
    vmware_password: "{{ gen1_pword }}"
    # Proxmox migration folder
    proxmox_migration_dir: /root/migration
    # Storage proxmox will import new vms into with qm import command
    proxmox_storage: local-lvm

  tasks:
    - name: Add proxmox host to inventory
      ansible.builtin.add_host:
        name: "{{ proxmox_host }}"
        ansible_host: "{{ proxmox_host }}"
      run_once: true

    - name: Create the proxmox migration directory if it doesn't exist
      ansible.builtin.file:
        path: "{{ proxmox_migration_dir }}"
        state: directory
        mode: '0755'
      delegate_to: "{{ proxmox_host }}"
      run_once: true

    - name: Check for each host and see if they already have an export folder created
      ansible.builtin.stat:
        path: "{{ proxmox_migration_dir }}/{{ hostvars[inventory_hostname].config.name }}"
      register: directory_status
      delegate_to: "{{ proxmox_host }}"

    - name: Block for exporting/importing hosts
      when: inventory_hostname != proxmox_host and ( not directory_status.stat.exists or not directory_status.stat.isdir )
      block:
        - name: Run ovftool to export vm from vmware to proxmox server if folder isn't already there
          ansible.builtin.shell: "{{ ovftool_path }}/ovftool --noSSLVerify vi://{{ vmware_username }}:{{ vmware_password }}@{{ vmware_host }}:443/{{ vmware_datacenter }}/vm/{{ vmware_directory }}/{{ hostvars[inventory_hostname].config.name }} ."
          args:
            chdir: "{{ proxmox_migration_dir }}/"
          delegate_to: "{{ proxmox_host }}"
          no_log: true   # keeps the credentials in the URL out of the job output

        - name: Call task file to provision new proxmox vm. Loop over hosts in play
          ansible.builtin.include_tasks:
            file: import-ovf.yml
          run_once: true
          loop: "{{ play_hosts }}"

    - name: Modify proxmox vms to have required settings
      community.general.proxmox_kvm:
        <<: *proxmox_auth
        name: "{{ hostvars[inventory_hostname].config.name }}"
        node: proxmox
        scsihw: virtio-scsi-pci
        cpu: x86-64-v2-AES
        bios: ovmf
        net:
          net0: 'virtio,bridge=vmbr0,firewall=1'
        efidisk0:
          storage: "{{ proxmox_storage }}"
          format: raw
          efitype: 4m
          pre_enrolled_keys: false
        update: true
        update_unsafe: true   # required to change net0 and add efidisk0 on an existing VM
      register: vm_update
      delegate_to: localhost

    - name: Start VM
      community.general.proxmox_kvm:
        <<: *proxmox_auth
        name: "{{ hostvars[inventory_hostname].config.name }}"
        node: proxmox
        state: started
      delegate_to: localhost
      # ignore_errors: true
```

I’ll cover the highlights rather than the entire playbook (which I do walk through in the video).

The third task (Check for each host and see if they already have an export folder created) checks whether an export directory for the current host already exists on the Proxmox host. That result feeds the conditional on the export/import block, so a host whose folder already exists won’t be exported/imported again.

Once the OVFs have been exported, the (Call task file to provision new proxmox vm. Loop over hosts in play) task runs. It runs once, looping over play_hosts, a magic variable that contains all of the hosts in the current play (current Ansible documentation lists it as a deprecated alias of ansible_play_batch). For each host, it finds the next available Proxmox VMID and then imports the OVF.

import-ovf.yml

```yaml
---
# Runs once per loop item (a host from play_hosts), from migrate-vms.yml
- name: Find the next available vmid
  ansible.builtin.command: pvesh get /cluster/nextid
  register: next_vmid
  changed_when: false   # read-only query, never a change
  delegate_to: "{{ proxmox_host }}"

- name: Run qm import with the new next_vmid
  when: item != proxmox_host
  # ovftool exported each VM to <name>/<name>.ovf inside the migration directory
  ansible.builtin.command: "qm importovf {{ next_vmid.stdout }} {{ hostvars[item].config.name }}/{{ hostvars[item].config.name }}.ovf {{ proxmox_storage }}"
  args:
    chdir: "{{ proxmox_migration_dir }}/"
  delegate_to: "{{ proxmox_host }}"
```

Once all OVFs have been imported, we need to modify their hardware settings via this task (Modify proxmox vms to have required settings).

With the proxmox_kvm module I’m using the update: true option. This works for most of the options I’m modifying, but it DOESN’T let me update the network settings or create an EFI disk, which is required for proper operation. To fix this you have to add the update_unsafe: true option. The module guards these options because changing them on an existing VM can break its config or even cause data loss. In this case I’m only adding required components to a freshly imported VM, so I’m good to go.
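One possible final check, appended after the Start VM task, is to wait until each migrated guest answers Ansible again. This sketch makes two assumptions. First, your inventory must still hold a reachable address for every guest after the move; a VMware inventory source may not report an IP for powered-off VMs, and a static ansible_host in host vars is one hypothetical way to cover that. Second, the guest must keep its IP on the new virtio NIC. The delay and timeout values are arbitrary.

```yaml
    - name: Wait for each migrated guest to accept connections again
      ansible.builtin.wait_for_connection:
        delay: 15      # give the guest a head start before the first attempt
        timeout: 600   # fail this host if it is not reachable within 10 minutes
```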

Manual Testing

While the automation is awesome, you will likely still want/need to do some manual testing. I’ve got the various steps here in brief so you can do just that.

Install OVFTool

Visit this VMware page and grab whichever version of OVFTool is appropriate for you. I grabbed the 4.4.3 archive. I extracted it with tar and made the ovftool and ovftool.bin files executable. I put this on my Proxmox host as I was running all the commands from there.
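The same install steps can be scripted. This sketch assumes three things:

- The archive has already been downloaded to the control node. The file name below is hypothetical.
- It unpacks into an ovftool/ directory, which is what the ovftool_path of /root/ovftool in migrate-vms.yml expects.
- The tool needed to unpack it (tar, or unzip for a .zip) is present on the Proxmox host.

```yaml
- name: Install OVFTool on the Proxmox host (sketch)
  hosts: proxmox.gregsowell.com
  gather_facts: false
  vars:
    ovftool_archive: files/VMware-ovftool-lin.x86_64.zip   # hypothetical local file name
  tasks:
    - name: Copy the archive from the control node and unpack it into /root
      ansible.builtin.unarchive:
        src: "{{ ovftool_archive }}"
        dest: /root
        creates: /root/ovftool/ovftool   # skip this step if it is already installed

    - name: Make the ovftool wrapper and binary executable
      ansible.builtin.file:
        path: "/root/ovftool/{{ item }}"
        mode: '0755'
      loop:
        - ovftool
        - ovftool.bin
```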

VM Prep

On the VMs you intend to migrate, you can run the following command to see if you have the virtio drivers on your machine:

```shell
# Quote the pattern so the shell doesn't expand it against the current directory
find /lib/modules/$(uname -r) -type f -name 'virtio_*'
```

If they are present (which has always been the case for me), you can create the new initramfs file manually:

```shell
dracut --add-drivers "virtio_scsi virtio_pci" -f -v /boot/initramfs-$(uname -r).img $(uname -r)
```

Browse For VMs With OVFTool

There’s a specific folder structure your VMs will follow. To browse around and make sure things are where you expect them to be, you can use the following command:

```shell
ovftool --noSSLVerify vi://username:password@host:443/YourDatacenter/vm
```

My environment doesn’t have a valid cert on vCenter, so I’m using the --noSSLVerify option.

You can also leave off :password in the command and it will prompt you for your password.
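One gotcha with credentials embedded in the vi:// locator: characters such as @ or : in a username or password clash with the locator syntax and have to be percent-encoded (for example, @ becomes %40). vSphere SSO usernames like administrator@vsphere.local hit this right away. Below is a sketch of the migrate playbook’s export task using Jinja2’s urlencode filter. It is my variation, not what the repo does. Note that this filter leaves / unescaped.

```yaml
        - name: Run ovftool with URL-encoded credentials (variation of the export task)
          ansible.builtin.shell: >-
            {{ ovftool_path }}/ovftool --noSSLVerify
            "vi://{{ vmware_username | urlencode }}:{{ vmware_password | urlencode }}@{{ vmware_host }}:443/{{ vmware_datacenter }}/vm/{{ vmware_directory }}/{{ hostvars[inventory_hostname].config.name }}"
            .
          args:
            chdir: "{{ proxmox_migration_dir }}/"
          delegate_to: "{{ proxmox_host }}"
          no_log: true   # the rendered command still contains the password
```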

YourDatacenter is the case-sensitive datacenter name, and /vm always needs to be there (it’s where VMs show up).

From there you can start browsing down to your VM paths.

Export OVFs

The command to actually export the OVFs should be executed from inside of the folder you want things saved to:

```shell
ovftool --noSSLVerify vi://username@host:443/MNS/vm/Greg/ciq/Greg-rocky8 .
```

Notice in the command I followed the folder structure Greg/ciq, then added the name of my VM.

At the very end of the command is a space and a period. The period is the target (the current directory). If you leave it off, OVFTool only probes the source VM and doesn’t export anything, so make sure “ .” is on the end.

Import OVFs

The command to import an OVF is as follows:

```shell
qm importovf <vmid> <containing folder>/<name of vm>.ovf <proxmox storage location>
```

An example would be:

```shell
qm importovf 400 Greg-rocky8-to-9-conversion/Greg-rocky8-to-9-conversion.ovf local-lvm
```

Notice you have to specify a unique VMID for each imported VM. You can look in the Proxmox interface to find the next one, or you can issue this CLI command and it will tell you:

```shell
pvesh get /cluster/nextid
```

Conclusion

Migrating from one product to another can feel impossible at times, but automation can be your secret superpower. We are quickly becoming migration experts, and we’d love to assist your team with complete migrations that leave the automation behind for you…or we can just do validation; as much or as little as you require.

As always, I appreciate you reading through, and would love your feedback.

Thanks and happy migrating!

Automating VMware Alternatives With Ansible And Ascender

I have personally used VMware, with good success, for nearly two decades. While it is a good product, folks occasionally ask me about alternatives and how viable they are. For this article/demo I’m going to use Proxmox VE, which is a competent, user-friendly hypervisor. Really, I’m going to examine this question from an automation perspective.

First, and most importantly, the user experience in Ascender can be exactly the same no matter which hypervisor sits behind it. This means your users can smoothly transition from one platform to the other without knowing anything has changed.

On the backend, Ansible and Ascender will use a different playbook for each hypervisor. That means different modules and sometimes slightly different procedures, but frequently they aren’t that different.

Demo Video

Interfaces Compared

VMware’s vCenter has been a pretty consistent interface for a while now:

Proxmox VE may be new to you, but the interface should look and feel awfully familiar:

Both let you access and edit settings, add/delete VMs and templates, and so on in very similar ways…BUT, who wants to manage infrastructure manually?

Playbooks Compared

You can find my Proxmox playbooks here, and my VMware playbooks are here.

The two playbooks in question, one for Proxmox and one for VMware, are structured very similarly, but use their respective modules.

I’m going to break down and comment on each playbook below.

Proxmox Playbook (proxmox-vm.yml)

```yaml
---
- name: Create and control VMs on Proxmox using Ascender
  hosts: localhost
  gather_facts: false
  vars:
    # Configure the api connection info for Proxmox host
    proxmox_auth: &proxmox_auth
      api_host: proxmox.gregsowell.com
      api_user: "{{ gen1_user }}"
      # api_user: root@pam # username format example
      # Use standard password
      api_password: "{{ gen1_pword }}"
      # Use api token and secret - example format
      # api_token_id: gregisa5.0
      # api_token_secret: 72a72987-ff68-44f1-9fee-c09adaaecf4d
```

Above, I use a YAML concept known as an anchor. In every task below I reference this anchor with an alias. This lets me define a set of options once and inject it in various other places in my playbook. An anchor is set with the & symbol, which labels the node it’s attached to; here that’s the whole mapping nested under proxmox_auth.
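Here is a tiny standalone illustration of the three pieces, with hypothetical keys and values. & names a node (the anchor), and * refers back to it (the alias). << is the YAML 1.1 merge key, which Ansible’s YAML loader honors. It is what lets the tasks below pull the auth settings into their module arguments.

```yaml
# Hypothetical values, purely to show anchor/alias/merge behaviour
base_auth: &base_auth           # & anchors this mapping under the name base_auth
  api_host: proxmox.example.com
  node: proxmox

start_args:
  <<: *base_auth                # * aliases the mapping; << merges its keys in
  name: test-rocky9             # result: api_host, node, and name

other_node_args:
  <<: *base_auth
  node: proxmox2                # a key set locally overrides the merged value
```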

Here I’m setting up the authentication. I have examples of doing username / password or using an api token. Notice that for username and password I’m using variables. This is because I’m passing in a custom credential via Ascender to the playbook at run time. Doing this allows me to securely maintain those credentials in the Ascender database.
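For reference, a custom credential type that injects those two variables could look like the sketch below. It assumes Ascender’s custom credential types follow the upstream AWX format it is built on. The input configuration and the injector configuration go into the two separate YAML fields of the credential type form. The field labels are my own; the variable names gen1_user and gen1_pword are the ones the playbook expects.

```yaml
# Input configuration
fields:
  - id: gen1_user
    type: string
    label: Username
  - id: gen1_pword
    type: string
    label: Password
    secret: true          # stored encrypted and masked in the UI
required:
  - gen1_user
  - gen1_pword
---
# Injector configuration: hand the values to the playbook as extra vars
extra_vars:
  gen1_user: '{{ gen1_user }}'
  gen1_pword: '{{ gen1_pword }}'
```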

```yaml
    # Configure template to clone for new vm
    clone_template: rocky9-template
    # Configure location on Host to store new vm
    storage_location: local-lvm
    # Linked Clone needs format=unspecified and full=false
    # Format of new VM
    vm_format: qcow2
    # Default name to use for the VM
    vm_name: test-rocky9
    # How many cores
    # vm_cores: 2
    # How many vcpus:
    # vm_vcpus: 2
    # Options for specifying network interface type
    # vm_net0: 'virtio,bridge=vmbr0'
    # vm_ipconfig0: 'ip=192.168.1.1/24,gw=192.168.1.1'
```

Above, I’m setting up defaults for my standard variables, which can be overridden at run time. Remember that variables passed in as extra_vars have the highest level of precedence.
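To see that precedence in action, the play defaults can be overridden with an extra-vars file. Here is a minimal sketch with a hypothetical file name and values; the variable names come from proxmox-vm.yml. Pass it with ansible-playbook proxmox-vm.yml -e @vm-request.yml.

```yaml
# vm-request.yml (hypothetical file name)
# Every key here beats the matching default in the play's vars section.
vm_action: provision
vm_name: web01
vm_cores: 4
vm_net0: 'virtio,bridge=vmbr0'
```

In Ascender, the same keys can go in the job template’s variables field or come from a survey, since both reach the playbook as extra_vars.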

```yaml
    # Switches for playbook control
    # vm_action: provision
    # vm_action: start
    # vm_action: stop
    # vm_action: delete

  tasks:
    - name: Block for creating a vm
      when: vm_action == "provision"
      block:
        - name: Create a VM based on a template
          community.general.proxmox_kvm:
            # api_user: root@pam
            # api_password: secret
            # api_host: helldorado
            <<: *proxmox_auth
            clone: "{{ clone_template }}"
            name: "{{ vm_name }}"
            node: proxmox
            storage: "{{ storage_location }}"
            format: "{{ vm_format }}"
            timeout: 500
            cores: "{{ vm_cores | default(omit) }}"
            vcpus: "{{ vm_vcpus | default(omit) }}"
            net:
              net0: "{{ vm_net0 | default(omit) }}"
            ipconfig:
              ipconfig0: "{{ vm_ipconfig0 | default(omit) }}"
          register: vm_provision_info

        - name: Pause after provision to give the API a chance to catch up
          ansible.builtin.pause:
            seconds: 10
```

Here you can see my first two tasks. If the vm_action variable is set to provision, this block of code runs. It clones a template. Here I’m doing a full clone, but I could also make it a linked clone if I wanted. You can also see some customization variables that are omitted when not set. After the VM provisions, the play pauses for 10 seconds to give the system time to register the new VM before proceeding.

This is also where you see the anchor being referenced. “<<: *proxmox_auth” is YAML’s merge key applied to the alias. It takes the mapping defined above and merges its keys into this module’s arguments, and any key set directly in the task overrides the merged value. That makes changes easier and saves several lines of code in each task.

```yaml
    - name: Start VM
      when: (vm_action == "provision" and vm_provision_info.changed) or vm_action == "start"
      community.general.proxmox_kvm:
        <<: *proxmox_auth
        name: "{{ vm_name }}"
        node: proxmox
        state: started
```

You can see in this start task that either of two conditions will trigger it. The first is vm_action being set to start. The second is vm_action being set to provision with the provision task actually making a change. It runs after a provision because a VM cloned from a template isn’t started by default. We also check the changed status because the clone operation is idempotent: if that VM already exists, it won’t do anything and will simply report back “ok”.

```yaml
    - name: Stop VM
      when: vm_action == "stop"
      community.general.proxmox_kvm:
        <<: *proxmox_auth
        name: "{{ vm_name }}"
        node: proxmox
        state: stopped

    - name: Delete VM block
      when: vm_action == "delete"
      block:
        - name: Stop VM with force
          community.general.proxmox_kvm:
            <<: *proxmox_auth
            name: "{{ vm_name }}"
            node: proxmox
            state: stopped
            force: true

        - name: Pause to allow shutdown to complete
          ansible.builtin.pause:
            seconds: 10

        - name: Delete VM
          community.general.proxmox_kvm:
            <<: *proxmox_auth
            name: "{{ vm_name }}"
            node: proxmox
            state: absent
```

The delete block isn’t too complex. It first stops the VM, pauses so the system will register the change, then performs the delete from disk.
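If the fixed 10-second pause ever proves too short or too long, one alternative is to poll until Proxmox reports the VM as stopped. This sketch could replace the pause inside the delete block. It assumes a community.general release that includes the proxmox_vm_info module (7.2.0 or later). The retry and delay values are arbitrary.

```yaml
        - name: Wait until Proxmox reports the VM as stopped
          community.general.proxmox_vm_info:
            <<: *proxmox_auth
            node: proxmox
            name: "{{ vm_name }}"     # filters the results down to this VM
          register: vm_state
          until: >-
            vm_state.proxmox_vms | length > 0 and
            vm_state.proxmox_vms[0].status == 'stopped'
          retries: 12                 # 12 tries x 5 seconds = up to a minute
          delay: 5
```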

VMware Playbook (vmware-vm.yml)

Compare and contrast the two playbooks: they are laid out almost identically, the tasks are in the same order, and they are configured almost the same. This means transitioning from one to the other should be pretty seamless.

```yaml
---
- name: Create and control VMs on VMware using Ascender
  hosts: localhost
  gather_facts: false
  vars:
    # below are all of the details for the VM. I'm overriding these at runtime.
    vm_datacenter: MNS
    vm_name: snowtest2
    # vm_template: Windows2016
    vm_template: Rocky8.6
    # vm_template: Rocky8
    # vm_template: Rocky9
    vm_folder: /Greg/ciq
    vm_disksize: 50
    vm_datastore: SSD
    # minimum of 4GB of RAM
    vm_memory: 4096
    vm_cpus: 4
    vm_netname: Greg
    vm_ip: 10.1.12.56
    vm_netmask: 255.255.255.0
    vm_gateway: 10.1.12.1
    vmware_auth: &vmware_auth
      hostname: "{{ vcenter_hostname }}"
      username: "{{ gen1_user }}"
      password: "{{ gen1_pword }}"
      validate_certs: false
    # Switches for playbook control
    # vm_action: provision
    # vm_action: start
    # vm_action: stop
    # vm_action: delete

  tasks:
    - name: Provision a VM
      when: vm_action == "provision"
      community.vmware.vmware_guest:
        <<: *vmware_auth
        folder: "{{ vm_folder }}"
        name: "{{ vm_name }}"
        datacenter: "{{ vm_datacenter }}"
        state: poweredon
        # guest_id: centos64Guest
        template: "{{ vm_template }}"
        # This is hostname of particular ESXi server on which user wants VM to be deployed
        disk:
          - size_gb: "{{ vm_disksize }}"
            type: thin
            datastore: "{{ vm_datastore }}"
        hardware:
          memory_mb: "{{ vm_memory }}"
          num_cpus: "{{ vm_cpus }}"
          scsi: paravirtual
        networks:
          - name: "{{ vm_netname }}"
            connected: true
            start_connected: true
            # device_type: vmxnet3
            type: dhcp
            # type: static
            # ip: "{{ vm_ip }}"
            # netmask: "{{ vm_netmask }}"
            # gateway: "{{ vm_gateway }}"
            # dns_servers: "{{ vm_gateway }}"
        # wait_for_ip_address: true
      register: deploy_vm

    - name: Start a VM
      when: vm_action == "start"
      community.vmware.vmware_guest:
        <<: *vmware_auth
        folder: "{{ vm_folder }}"
        name: "{{ vm_name }}"
        datacenter: "{{ vm_datacenter }}"
        state: poweredon

    - name: Stop a VM
      when: vm_action == "stop"
      community.vmware.vmware_guest:
        <<: *vmware_auth
        folder: "{{ vm_folder }}"
        name: "{{ vm_name }}"
        datacenter: "{{ vm_datacenter }}"
        # state: poweredoff
        state: shutdownguest

    - name: Delete a VM
      when: vm_action == "delete"
      community.vmware.vmware_guest:
        <<: *vmware_auth
        folder: "{{ vm_folder }}"
        name: "{{ vm_name }}"
        datacenter: "{{ vm_datacenter }}"
        state: absent
        force: true
```

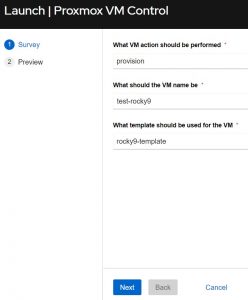

Interface Comparison In Ascender

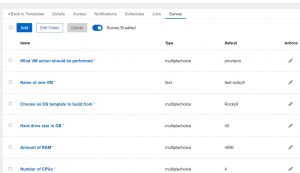

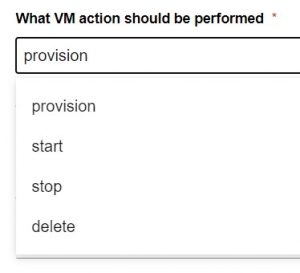

For my job templates (how you pull your playbook and required components together in Ascender), I’m using something called a survey. This lets you quickly and easily configure a set of questions for the user to answer before the automation runs. Here’s an example of my VMware survey:

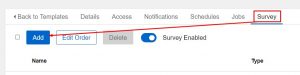

Adding an entry is as simple as clicking add and filling in the blanks:

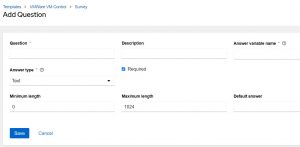

Notice in the above there is a field for “Answer variable name”. This is the name of the extra_vars variable the answer is passed to the playbook in. That’s how these surveys work; they simply pass info to the playbook as extra_vars.
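Surveys can also be defined as code. This sketch makes two assumptions. First, Ascender exposes the same API as upstream AWX, which it is built on. Second, the awx.awx collection is installed and pointed at your Ascender instance, for example via its CONTROLLER_HOST and CONTROLLER_OAUTH_TOKEN environment variables. The job template, inventory, project, and credential names are hypothetical; vm_name, vm_action, and proxmox-vm.yml come from this article.

```yaml
- name: Manage the Proxmox job template and survey as code (sketch)
  hosts: localhost
  gather_facts: false
  tasks:
    - name: Create or update the job template with a survey
      awx.awx.job_template:
        name: Proxmox VM Control            # hypothetical
        job_type: run
        inventory: Localhost Inventory      # hypothetical
        project: Proxmox Playbooks          # hypothetical
        playbook: proxmox-vm.yml
        credentials:
          - Proxmox API Credential          # hypothetical custom credential
        survey_enabled: true
        survey_spec:
          name: ""
          description: ""
          spec:
            - question_name: VM name
              variable: vm_name             # the "Answer variable name"
              type: text
              required: true
            - question_name: Action
              variable: vm_action
              type: multiplechoice
              # newline-separated choices, the long-standing AWX survey format
              choices: "provision\nstart\nstop\ndelete"
              default: provision
              required: true
        state: present
```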

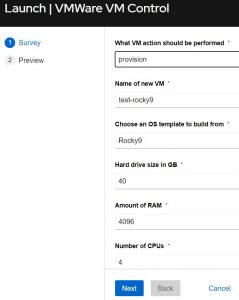

So for the sake of comparison, here’s what it looks like when you launch the two different job templates:

VMware Job Template:

Notice how they are almost identical. I added all of the options to the VMware JT, but kept the Proxmox one a little cleaner. I could just as easily have added all of the knobs, but I wanted to show that you can make them full-featured or simple. In the end they accomplish the same task: you put in the VM’s name, choose an action to perform, and add any additional data required. That’s literally all there is to it!

When I say choose an action it’s as easy as this:

Conclusion

This should show how easy it is to automate different hypervisors. Keep in mind that this example is simple, but it can be expanded to automate virtually all of the functions of your virtual environment.

As always, I’m looking for feedback. How would you use this in your environment…how would you tweak or tune this?

CIQ also does professional services, so if you need help building, configuring, or migrating your automation strategy, environments, or systems, please reach out!

Thanks and happy automating!

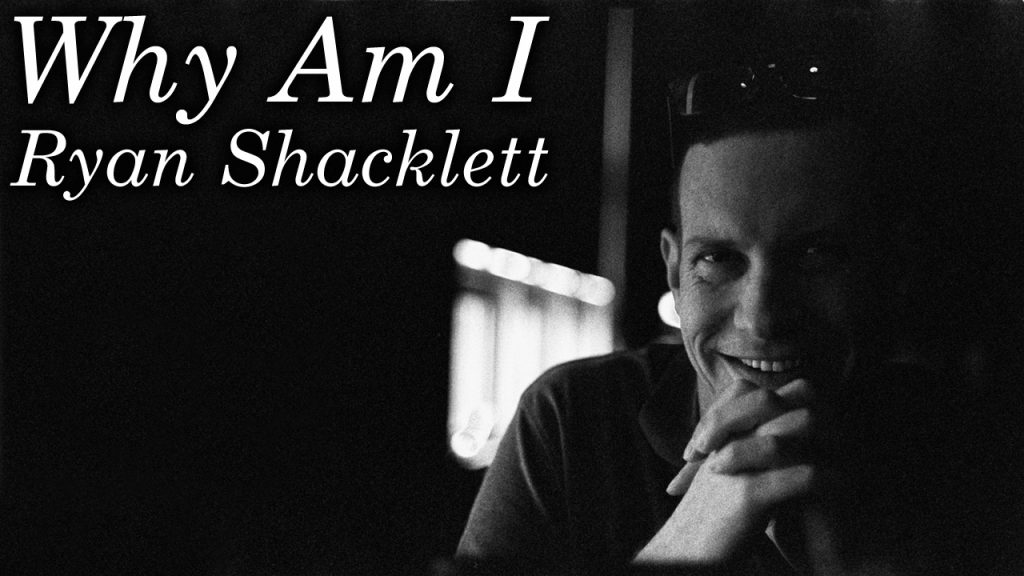

Hey everybody, I’m Greg Sowell and this is Why Am I, a podcast where I talk to interesting people and try to trace a path to where they find themselves today. My guest this go around is Ryan Shacklett. Ryan has multiple fursonas, his main one being Wild Acai. For the uninitiated, that means he attends conventions and events dressed in an insanely impressive animal suit…often referred to as a “furry”. Not only does it provide a place to explore parts of your personality, but it also allows for a lot of creative expression. Beyond participating, he also gets to give these experiences to others through his own multi-employee company that creates these impressive suits. Help us grow by sharing with someone!

Please show them some love on their socials here: http://waggerycos.com/, https://twitter.com/waggerycos.


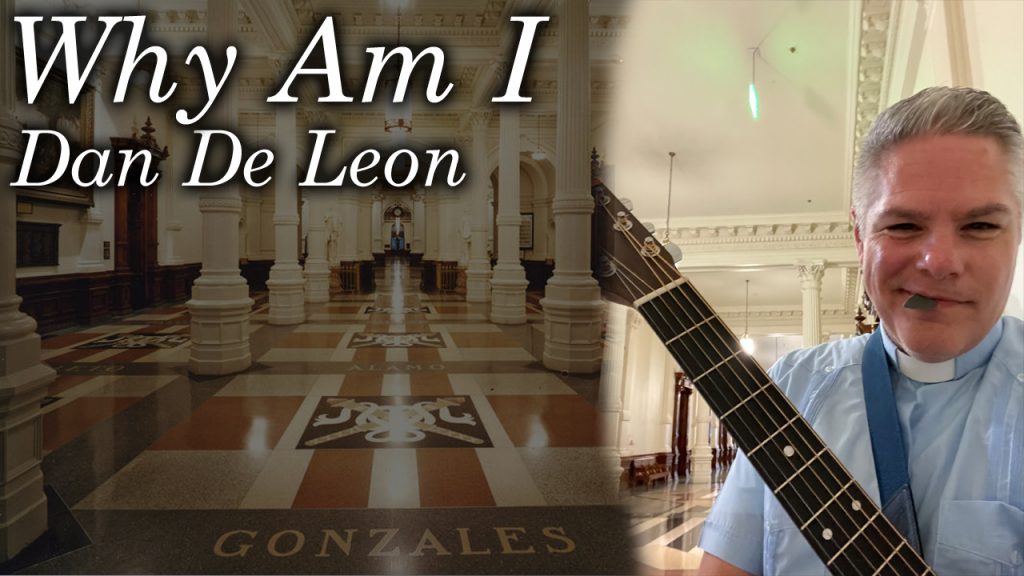

Hey everybody, I’m Greg Sowell and this is Why Am I, a podcast where I talk to interesting people and try to trace a path to where they find themselves today. My guest this go around is Dan De Leon. Dan lives his life the way he leads his church: open and affirming. That was a term new to me, but essentially it means all people are welcome and supported…no matter how able-bodied you are, or where you are in the LGBTQIA+ box of crayons. Dan gives me hope. Hope that the religious people I care about can come to the one real truth: loving others unconditionally is the only thing that matters. You follow this principle first and fit your faith in around it, not the other way around. I learn a LOT in this conversation, and I hope you do too. Please enjoy this chat with Dan. Help us grow by sharing with someone!

Please show them some love on their socials here: https://www.friends-ucc.org/, https://www.facebook.com/friendschurchucc, https://www.instagram.com/friends_ucc.


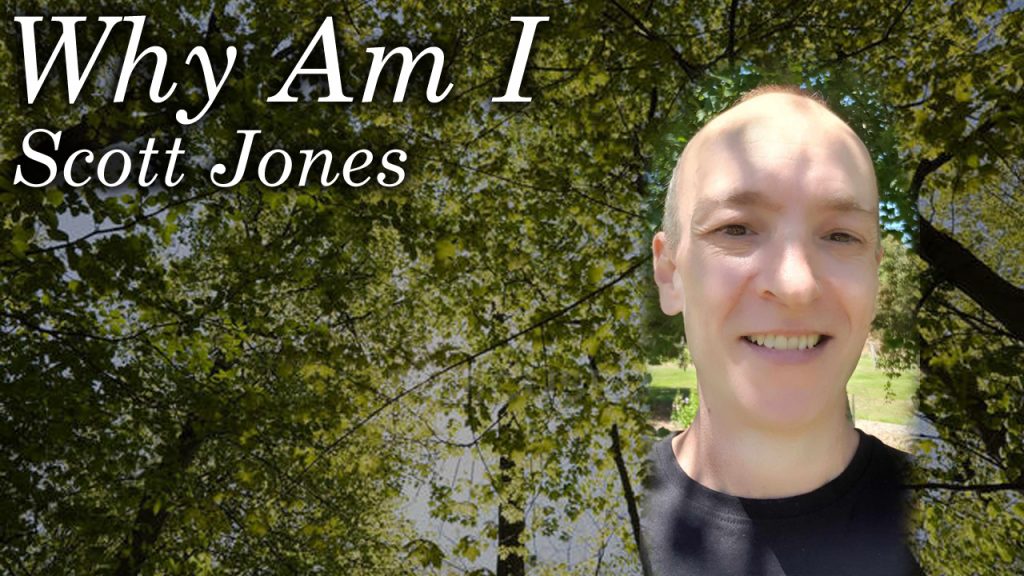

Hey everybody, I’m Greg Sowell and this is Why Am I, a podcast where I talk to interesting people and try to trace a path to where they find themselves today. My guest this go around is Scott Jones. He’s a British-born Aussie who has always felt a bit like a fish out of water. Part of that feeling came to light when he recently realized he’s gay, and now the world literally looks different. I mean, imagine waking up one day and getting to experience the world through a beautiful new lens. I hope you enjoy this chat with Scott. Help us grow by sharing with someone!
