Welcome to “the Fantasy Restaurant,” the warmup exercise for the Why Am I podcast. In it, my guests get to pick their favorite drink, appetizer, main, sides, and dessert… anything goes. This is another vegetarian meal, but it is packed with flavors and textures… I think maybe enough to satiate any palate. Word of warning: I was on the tail end of a bad cold, so there are some random pauses for me to cough, and I likely sound a little rough! I hope you enjoy this meal with Kayleigh. Help us grow by sharing with someone!

Please show them some love on their socials here: https://www.instagram.com/mermaid.kayleigh/, https://linktr.ee/mermaid.kayleigh.

When using inventories in Ascender and AWX, it’s often advantageous to use dynamic inventories. This really just means a way to query a Configuration Management Database (CMDB) for a list of hosts and their variables. Ascender has many of these built in for services like Amazon, Google, or VMware, but what about when your CMDB isn’t on that list? That’s where custom dynamic inventory scripts come in! They allow you to query any system or digest any file to build an inventory dynamically.
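For reference, here is roughly what any inventory script has to do. As I understand it, Ascender and AWX run inventory sources through ansible-inventory, so the script ends up being called by Ansible's script inventory plugin with `--list`. It must print JSON containing groups and, optionally, a `_meta.hostvars` map; when `_meta` is present, Ansible skips calling the script once per host with `--host <hostname>`. This is a minimal sketch with placeholder data (web1.example.com and a webservers group are made up), not a real CMDB integration.

```python
#!/usr/bin/env python
"""Minimal sketch of the inventory-script contract (--list / --host)."""
import argparse
import json


def build_inventory():
    # Placeholder data for illustration; a real script would query the CMDB here.
    return {
        "_meta": {
            "hostvars": {
                "web1.example.com": {"ansible_host": "192.0.2.10"},
            }
        },
        "all": {"hosts": ["web1.example.com"], "vars": {}},
        "webservers": {"hosts": ["web1.example.com"]},
    }


def host_vars(inventory, hostname):
    # Variables for one host (used by --host); empty dict for unknown hosts.
    return inventory["_meta"]["hostvars"].get(hostname, {})


def main(argv=None):
    parser = argparse.ArgumentParser(description="Example dynamic inventory")
    mode = parser.add_mutually_exclusive_group()
    mode.add_argument("--list", action="store_true", help="print the full inventory")
    mode.add_argument("--host", help="print variables for a single host")
    args = parser.parse_args(argv)

    inventory = build_inventory()
    if args.host:
        print(json.dumps(host_vars(inventory, args.host), indent=4))
    else:
        # Ansible passes --list; treating "no arguments" the same way eases manual testing.
        print(json.dumps(inventory, indent=4))


if __name__ == "__main__":
    main()
```

The script in this post ignores its arguments and always prints the full inventory; because that output includes `_meta`, Ansible should never need to call it with `--host`.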


Scripts

You can find my scripts here in my git repository.

First is my sample JSON data file (json-payload):

```json
{
    "hosts": [
        {
            "id": 1,
            "hostname": "host1.example.com",
            "ip_address": "192.168.1.101",
            "status": "active",
            "os": "Linux",
            "owner": "Admin User"
        },
        {
            "id": 2,
            "hostname": "host2.example.com",
            "ip_address": "192.168.1.102",
            "status": "inactive",
            "os": "Windows",
            "owner": "User 1"
        },
        {
            "id": 3,
            "hostname": "host3.example.com",
            "ip_address": "192.168.1.103",
            "status": "active",
            "os": "Linux",
            "owner": "User 2"
        },
        {
            "id": 4,
            "hostname": "host4.example.com",
            "ip_address": "192.168.1.104",
            "status": "active",
            "os": "Windows",
            "owner": "User 3"
        },
        {
            "id": 5,
            "hostname": "host5.example.com",
            "ip_address": "192.168.1.105",
            "status": "inactive",
            "os": "Linux",
            "owner": "User 4"
        }
    ]
}
```

As you can see, this is a simple set of JSON data that could be returned by my CMDB. Remember, the data can come from a live query or be read from a file. The list contains five hosts with a mix of variables.
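Because the payload can come from a live query or a file, one option is to put that choice behind a single loader so the rest of the script doesn't care where the data came from. This is a sketch: `load_payload` is a hypothetical helper, and treating `http://`/`https://` sources as live queries is an assumption. A real CMDB API will usually need authentication and may return a differently shaped JSON document.

```python
import json
import urllib.request


def load_payload(source, timeout=10):
    """Return the parsed CMDB payload from a URL or a local file path.

    Hypothetical helper: sources starting with http:// or https:// are
    fetched live; anything else is treated as a path to a JSON file.
    """
    if source.startswith(("http://", "https://")):
        with urllib.request.urlopen(source, timeout=timeout) as response:
            return json.load(response)  # json accepts the bytes body directly
    with open(source, "r") as file:
        return json.load(file)
```

In custom_inventory.py, `inventory_data = load_payload('json-payload')` would take the place of the open/read/json.loads lines.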

Now for the custom dynamic inventory script (custom_inventory.py):

```python
#!/usr/bin/env python

# test import script

import json

# Specify the path to the JSON payload file
json_payload_file = 'json-payload'

# Read the JSON payload from the file
with open(json_payload_file, 'r') as file:
    json_payload = file.read()

# Parse the JSON payload
inventory_data = json.loads(json_payload)

# Initialize inventory data structures
ansible_inventory = {
    '_meta': {
        'hostvars': {}
    },
    'all': {
        'hosts': [],
        'vars': {
            # You can define global variables here
        }
    }
}

# Initialize group dictionaries for each OS
os_groups = {}

# Process each host in the JSON payload
for host in inventory_data['hosts']:
    host_id = host['id']
    hostname = host['hostname']
    ip_address = host['ip_address']
    status = host['status']
    os = host['os']
    owner = host['owner']

    # Add the host to the 'all' group
    ansible_inventory['all']['hosts'].append(hostname)

    # Create host-specific variables
    host_vars = {
        'ansible_host': ip_address,
        'status': status,
        'os': os,
        'owner': owner
        # Add more variables as needed
    }

    # Add the host variables to the '_meta' dictionary
    ansible_inventory['_meta']['hostvars'][hostname] = host_vars

    # Add the host to the corresponding OS group
    if os not in os_groups:
        os_groups[os] = {
            'hosts': []
        }
    os_groups[os]['hosts'].append(hostname)

# Add the OS groups to the inventory
ansible_inventory.update(os_groups)

# Print the inventory in JSON format
print(json.dumps(ansible_inventory, indent=4))
```

Note: make sure the file is executable before you push it to your git repository; otherwise, you will hit a permissions error when Ascender runs the script.
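If you'd rather set the bit from Python (for example, in a small release helper), the sketch below does roughly what `chmod a+x` does. Git records the executable bit, so the change has to be committed; on systems that don't preserve file modes, `git update-index --chmod=+x custom_inventory.py` sets it in the index directly. The `make_executable` name is hypothetical, and this assumes a POSIX file system.

```python
import os
import stat


def make_executable(path):
    """Add execute permission for user, group, and others (similar to chmod a+x)."""
    current = stat.S_IMODE(os.stat(path).st_mode)
    new_mode = current | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH
    os.chmod(path, new_mode)
    return new_mode
```

For example, `make_executable("custom_inventory.py")` turns a 0o644 file into 0o755.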

The first half of the script is fairly standard across inventory scripts. It begins to vary when I set up the variable for dynamic group mapping:

```python
# Initialize group dictionaries for each OS
os_groups = {}
```

This isn’t a requirement, but it certainly adds a lot of value. I’m grouping the hosts by OS type, so I create the os_groups variable to store that information.
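The same idea extends to any field in the payload. As an alternative to the single os_groups dictionary (this is not what the script above does), the sketch below uses collections.defaultdict to create one group per value of several fields. The `group_hosts` helper and the `os_`/`status_` naming scheme are assumptions for illustration.

```python
from collections import defaultdict


def group_hosts(hosts, keys=("os", "status")):
    """Build inventory groups such as os_Linux or status_active from host fields."""
    groups = defaultdict(lambda: {"hosts": []})
    for host in hosts:
        for key in keys:
            value = host.get(key)
            if value is None:
                continue  # skip hosts that lack this field
            groups[f"{key}_{value}"]["hosts"].append(host["hostname"])
    return dict(groups)  # plain dict for json.dumps / dict.update


sample = [
    {"hostname": "host1.example.com", "os": "Linux", "status": "active"},
    {"hostname": "host2.example.com", "os": "Windows", "status": "inactive"},
    {"hostname": "host3.example.com", "os": "Linux", "status": "active"},
]
groups = group_hosts(sample)
print(groups["os_Linux"])  # {'hosts': ['host1.example.com', 'host3.example.com']}
```

The prefixes keep groups from different fields apart, so a status value can never collide with an OS value.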

```python
# Process each host in the JSON payload
for host in inventory_data['hosts']:
    host_id = host['id']
    hostname = host['hostname']
    ip_address = host['ip_address']
    status = host['status']
    os = host['os']
    owner = host['owner']

    # Add the host to the 'all' group
    ansible_inventory['all']['hosts'].append(hostname)
```

This section processes each host’s information from the JSON payload and maps it to some standard variables for later use. Finally, I add each host to the “all” inventory group.

```python
# Create host-specific variables
host_vars = {
    'ansible_host': ip_address,
    'status': status,
    'os': os,
    'owner': owner
    # Add more variables as needed
}

# Add the host variables to the '_meta' dictionary
ansible_inventory['_meta']['hostvars'][hostname] = host_vars
```

Here I’m creating the host_vars section (all of the variables stored for each host in the inventory) and adding it to the _meta dictionary.
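If your CMDB returns many fields, a small mapping table can replace the one-assignment-per-field approach. In this sketch, `FIELD_MAP`, `SKIP_FIELDS`, and `to_host_vars` are hypothetical names: mapped fields are renamed (ip_address becomes ansible_host, as in the script), skipped fields are dropped, and everything else passes through unchanged.

```python
FIELD_MAP = {"ip_address": "ansible_host"}  # CMDB field -> inventory variable
SKIP_FIELDS = {"id", "hostname"}            # not needed as host variables


def to_host_vars(host):
    """Translate one CMDB record into an Ansible host-variable dict."""
    host_vars = {}
    for field, value in host.items():
        if field in SKIP_FIELDS:
            continue
        host_vars[FIELD_MAP.get(field, field)] = value
    return host_vars


record = {
    "id": 1,
    "hostname": "host1.example.com",
    "ip_address": "192.168.1.101",
    "status": "active",
    "os": "Linux",
    "owner": "Admin User",
}
print(to_host_vars(record))
# {'ansible_host': '192.168.1.101', 'status': 'active', 'os': 'Linux', 'owner': 'Admin User'}
```

The trade-off is that every new CMDB field becomes a host variable automatically, which you may not want for sensitive fields; adding them to `SKIP_FIELDS` keeps them out.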

```python
    # Add the host to the corresponding OS group
    if os not in os_groups:
        os_groups[os] = {
            'hosts': []
        }
    os_groups[os]['hosts'].append(hostname)

# Add the OS groups to the inventory
ansible_inventory.update(os_groups)
```

Finally, I create the OS groups, add each host to its corresponding group, and merge the groups into the inventory.
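One thing to watch with `ansible_inventory.update(os_groups)`: the group names come straight from CMDB data. Ansible expects group names to follow its variable-naming rules and, depending on the TRANSFORM_INVALID_GROUP_CHARS setting, either warns about or rewrites names containing characters such as spaces or dashes; a CMDB value of `all` would also silently replace the existing all group. The sketch below normalizes names and refuses to clobber reserved keys; `safe_group_name`, `add_groups`, and the `group_` prefix are hypothetical choices.

```python
import re

# Keys that already have a meaning in the inventory JSON.
RESERVED = {"_meta", "all", "ungrouped"}


def safe_group_name(value):
    """Turn a CMDB value such as 'Red Hat' into a valid group name ('Red_Hat')."""
    name = re.sub(r"[^A-Za-z0-9_]", "_", str(value))
    if not name or name[0].isdigit():
        name = "_" + name  # identifiers cannot start with a digit
    return name


def add_groups(inventory, groups):
    """Merge groups into the inventory without overwriting reserved keys."""
    for raw_name, group in groups.items():
        name = safe_group_name(raw_name)
        if name in RESERVED:
            name = "group_" + name  # hypothetical prefix to avoid a collision
        hosts = inventory.setdefault(name, {}).setdefault("hosts", [])
        for host in group["hosts"]:
            if host not in hosts:  # two raw names may map to the same group
                hosts.append(host)
    return inventory
```

In custom_inventory.py, `add_groups(ansible_inventory, os_groups)` would take the place of `ansible_inventory.update(os_groups)`.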

Ascender Configuration

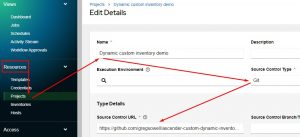

The Ascender config is pretty straightforward. Start by adding a project that points to the script repository:

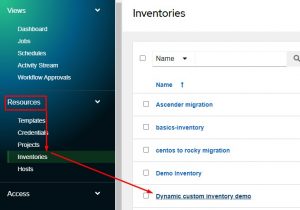

Next, I attach the script to an inventory. First, I either create a new inventory or open an existing one:

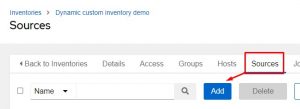

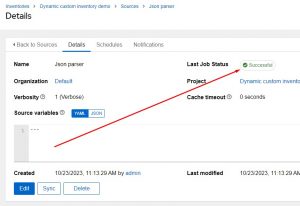

Once in the inventory, I click the “Sources” tab and then “Add”:

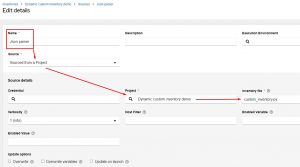

From here, give the source a name, choose “Sourced from a Project” under Source, select our newly created project, and finally choose the new inventory script:

A few notes on this section: at the bottom there are some checkbox options.

Overwrite will wipe everything (hosts, groups, and variables) and replace it with what the script returns.

Overwrite variables will wipe all variables and replace them with the data the script returns.

Update on launch will force this script to rerun each time the inventory is used (this is often a desired option).


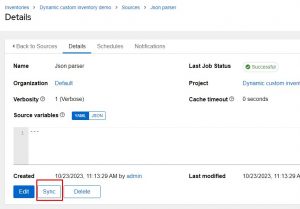

To manually synchronize, simply click the “Sync” button.

To view the status of a sync, or to debug what is happening (or happened) during it, click “Last Job Status”:

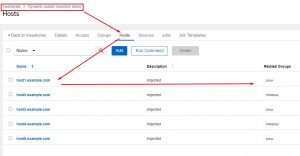
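When a sync fails, it can be quicker to reproduce the problem locally before pushing a fix. This sketch runs an inventory script with `--list`, parses its output, and flags grouped hosts that have no hostvars entry. `run_script` and `check_inventory` are hypothetical helpers, and the checks are just sensible sanity checks, not rules Ascender enforces. Because it launches the script with the current Python interpreter, it will not catch a missing executable bit or a bad shebang line.

```python
import json
import subprocess
import sys


def run_script(path):
    """Run an inventory script with --list and return its parsed JSON output."""
    result = subprocess.run(
        [sys.executable, path, "--list"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def check_inventory(inventory):
    """Return a list of human-readable problems (empty when everything looks fine)."""
    problems = []
    hostvars = inventory.get("_meta", {}).get("hostvars")
    if hostvars is None:
        problems.append("missing _meta.hostvars (Ansible would call --host per host)")
        hostvars = {}
    for name, group in inventory.items():
        if name == "_meta":
            continue
        # A group may be a plain list of hosts or an object with a "hosts" key.
        hosts = group if isinstance(group, list) else group.get("hosts", [])
        for host in hosts:
            if host not in hostvars:
                problems.append(f"host {host!r} in group {name!r} has no hostvars")
    return problems
```

Run from the repository directory (the script reads json-payload relative to the working directory), `check_inventory(run_script("custom_inventory.py"))` should return an empty list.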

You can check out the results of the sync by going to the Hosts section of your inventory.

Conclusion

Dynamic inventory sources are a powerful way to extend the usefulness of Ascender. Dynamic is the name of the game, since it keeps your automation as flexible as possible.

If you have any questions or concerns, or any tweaks or tunes you’d make, please reach out; I’d love to hear from you.

Thanks and happy automating!


Please show them some love on their socials here: https://www.instagram.com/steadfastcounseling/, https://steadfastcounseling.com/about-me/, https://www.tiktok.com/@theeverytherapist, https://www.linkedin.com/in/lauren-auer-m-a-lcpc-4633a872/.

Workflows allow AAP, AWX, and Ascender to visually connect playbooks together. This works great… until you try to pass variables from one job template (a playbook plus the other parts Ascender needs to run it) to another. That’s where the set_stats module comes in. It can pass data between workflow jobs, but the documentation doesn’t fully explain how it works, and some much-needed functionality doesn’t exist… so this blog post will show you how to work around these limitations!


set_fact vs set_stats

set_fact

If you’ve reached the point in your automation journey where you’re playing with workflows, I’m going to go out on a limb and say you’ve already used the set_fact module. In short, it allows you to create a variable and/or set its contents. Here’s an example:

```yaml
- name: What am I having for lunch?
  ansible.builtin.set_fact:
    lunch: tacos
```

This works a treat unless you want to pass the variable to another job template in a workflow… these variables are scoped to the individual job template, but there is a workaround.

set_stats

The set_stats module looks pretty similar to set_fact. For example:

```yaml
- name: What am I having for lunch?
  ansible.builtin.set_stats:
    data:
      lunch: tacos
```

Beyond the different module name, the only real difference here is an option called “data” that holds my new variables, so it should feel fairly familiar. One of its key features is that set_stats variables (depending on how I create them) can be passed to another job template in a workflow! Its operation, however, is quite different.

Functional Differences

By default, set_fact creates a “lunch” variable (lunch being the variable name from the example above) for each host, and each host can hold different contents in its “lunch” variable.

set_stats, on the other hand, is not per host by default, and its information is aggregated. This means that if I have 10 hosts, there is only one copy of each set_stats variable, and whenever new information is set in it, everything just gets smashed together.

Playbooks And Examples

You can find my playbooks here in my ansible-misc repository.

First is the playbook that does the collection (set-stats-test.yml), and a second playbook displays all of the information (set-stats-test2.yml). They are separate playbooks so that I can build a workflow from them. I’m only going to cover the collection playbook, though.

I’ll show one or two tasks at a time, followed by the results from the second playbook, to give you an idea of what each one does.

Per Host Stats

```yaml
- name: per_host true and aggregate true
  ansible.builtin.set_stats:
    data:
      dist_ver_per_host_true_agg_true: "{{ ansible_facts.distribution_version }}"
    per_host: true

- name: per_host true and aggregate false
  ansible.builtin.set_stats:
    data:
      dist_ver_per_host_true_agg_false: "{{ ansible_facts.distribution_version }}"
    per_host: true
    aggregate: false
```

These two tasks both set the per_host option to true, but only the first has aggregation enabled (it relies on the default). The results in the second job template for these variables are:

```
"dist_ver_per_host_true_agg_true = not set",
"dist_ver_per_host_true_agg_false = not set",
```

As you can see, neither variable actually made it to the second job template… odd, huh? This is because workflows don’t pass per-host stats. The stats are passed to the downstream job templates as extra vars, which apply to every host equally, so there is no place to carry a per-host value.
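A rough way to picture this: stats are kept in one bucket for the whole run plus one bucket per host, and only the run-level bucket is handed to the next job template as extra vars. The sketch models that under an assumption, based on my reading of how ansible-runner and AWX collect set_stats artifacts, that only the run-level `_run` bucket is exported. `export_artifacts` and the dictionary layout are illustrative, and the per-host values are made up to match the two hosts in this post.

```python
def export_artifacts(custom_stats):
    """Model only: return what a downstream job would receive as extra vars.

    custom_stats maps "_run" to run-level stats (per_host: false) and each
    host name to that host's per_host: true stats.
    """
    return dict(custom_stats.get("_run", {}))


custom_stats = {
    "_run": {"dist_ver_per_host_false_agg_false": "8.8"},
    "greg-rocky86lts": {"dist_ver_per_host_true_agg_true": "8.6"},
    "greg-rocky86nonlts": {"dist_ver_per_host_true_agg_true": "8.8"},
}
extra_vars = export_artifacts(custom_stats)
# Extra vars are identical for every host in the next job template,
# so the per-host buckets have nowhere to go.
print(extra_vars)  # {'dist_ver_per_host_false_agg_false': '8.8'}
```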

Not Per Host

```yaml
- name: per_host false and aggregate true- default behavior
  ansible.builtin.set_stats:
    data:
      dist_ver_per_host_false_agg_true: "{{ ansible_facts.distribution_version }}"
    per_host: false

- name: per_host false and aggregate false
  ansible.builtin.set_stats:
    data:
      dist_ver_per_host_false_agg_false: "{{ ansible_facts.distribution_version }}"
    per_host: false
    aggregate: false
```

These two tasks aren’t per host, so their information should be passed along. One uses aggregation and one does not; by default, set_stats uses per_host: false and aggregate: true. Here are the results:

```
"dist_ver_per_host_false_agg_true = 8.68.8",
"dist_ver_per_host_false_agg_false = 8.8"
```

The two hosts I’m running this against return different information: one passes back 8.6 and the other 8.8.

As you can see, the default behavior just smashes all of the returned information together (here the two version strings are concatenated into 8.68.8), which generally isn’t that helpful unless you are passing along very simple information.

The second task, with aggregation turned off, simply returns the value from the very last host processed… which often isn’t useful either.
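To make the “smashing together” concrete, here is a simplified Python model of the aggregation rules, based on my reading of ansible-core's `AggregateStats.update_custom_stats`. With aggregation on, a new dictionary is merged into the old one, a value of a different type is ignored, and anything else is combined with `+`, so two strings concatenate. With aggregation off, the new value simply replaces the old one. `StatsModel` is illustrative, not Ansible's API, and the variable names are shortened.

```python
def merge(a, b):
    """Recursively merge dict b into a copy of dict a (values from b win)."""
    result = dict(a)
    for key, value in b.items():
        if isinstance(result.get(key), dict) and isinstance(value, dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = value
    return result


class StatsModel:
    """Simplified model of set_stats bookkeeping; not Ansible's real API."""

    def __init__(self):
        # "_run" holds run-level stats (per_host: false); other keys are host names.
        self.custom = {}

    def set_stats(self, data, host=None, aggregate=True):
        bucket = self.custom.setdefault(host or "_run", {})
        for name, value in data.items():
            if not aggregate or name not in bucket:
                bucket[name] = value                       # first write, or aggregate: false
            elif not isinstance(value, type(bucket[name])):
                continue                                   # mismatched types: new value dropped
            elif isinstance(value, dict):
                bucket[name] = merge(bucket[name], value)  # dictionaries are merged
            else:
                bucket[name] = bucket[name] + value        # strings, lists, numbers use +


stats = StatsModel()
for version in ("8.6", "8.8"):  # the two hosts from the example, in order
    stats.set_stats({"agg_true": version})
    stats.set_stats({"agg_false": version}, aggregate=False)
print(stats.custom["_run"])  # {'agg_true': '8.68.8', 'agg_false': '8.8'}
```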

Per Host Stats Workaround

There is a workaround for passing per host information!

```yaml
- name: per host collection and aggregate false via a workaround
  ansible.builtin.set_stats:
    data:
      dist_ver_per_host_agg_false: "{{ dist_ver_per_host_agg_false | default({}) | combine({ inventory_hostname : { 'ver' : ansible_facts.distribution_version}}) }}"
    aggregate: false

- name: per host collection and aggregate true via a workaround
  ansible.builtin.set_stats:
    data:
      dist_ver_per_host_agg_true: "{{ dist_ver_per_host_agg_true | default({}) | combine({ inventory_hostname : { 'ver' : ansible_facts.distribution_version}}) }}"
    aggregate: true
```

Both tasks pack a lot into a single line, so let’s break it down:

```yaml
dist_ver_per_host_agg_false: "{{ dist_ver_per_host_agg_false | default({}) | combine({ inventory_hostname : { 'ver' : ansible_facts.distribution_version}}) }}"
```

This is actually one long line (even if it wraps on your screen).

It takes the existing variable and builds on it.

default({}) sets the variable to an empty dictionary if it doesn’t exist (it’s really a way to avoid an undefined-variable error).

combine merges two dictionaries (which is what we are building). Here it extends the variable with a new entry, keyed by inventory_hostname, that holds the host’s distribution version.
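If the Jinja filters are unfamiliar, this Python snippet mirrors what the expression does for one host. It's an approximation: an undefined variable is represented as `None`, `default({})` becomes a `None` check, and `combine` becomes a shallow dictionary merge, which matches combine's default non-recursive behavior. The function name `add_host_version` is hypothetical.

```python
def add_host_version(existing, inventory_hostname, distribution_version):
    """Python analogue of:
    existing | default({}) | combine({inventory_hostname: {'ver': distribution_version}})
    """
    base = existing if existing is not None else {}                     # default({})
    return {**base, inventory_hostname: {"ver": distribution_version}}  # combine (shallow)


print(add_host_version(None, "greg-rocky86lts", "8.6"))
# {'greg-rocky86lts': {'ver': '8.6'}}
```

As far as I can tell, set_stats data isn't available as a regular variable within the same run, so each host starts from an undefined variable and produces a one-entry dictionary; it's set_stats' own aggregation that then merges those entries across hosts.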

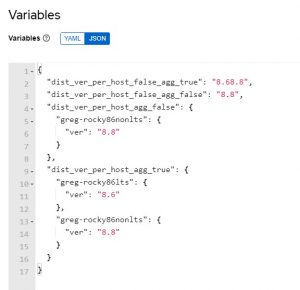

Here are the results when run:

First task

```
"dist_ver_per_host_agg_false": {
    "greg-rocky86nonlts": {
        "ver": "8.8"
```

Second task

```
"dist_ver_per_host_agg_true": {
    "greg-rocky86lts": {
        "ver": "8.6"
    },
    "greg-rocky86nonlts": {
        "ver": "8.8"
    }
```

You can see that the first task has aggregate turned off, so it only shows whichever host was processed last… which means it’s not really per host!

The second task returns information for all of my hosts! This means that I can collect per-host information in one job template and pass it to another in a workflow!
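Putting the pieces together, this self-contained simulation replays the two workaround tasks for the two hosts shown above. Each host contributes a one-entry dictionary built by combine(). With aggregate: false, the last host's dictionary replaces the value; with aggregate: true, the dictionaries are merged (as I read ansible-core's stats code, dictionary stats are merged rather than concatenated). The host order and versions come from the results above. `run_task` is an illustrative name, and the shallow merge is a simplification that is sufficient here because each host writes a different key.

```python
def run_task(hosts, aggregate):
    """Simulate one set_stats workaround task across hosts; return the run-level value."""
    value = None
    for hostname, version in hosts:
        contribution = {hostname: {"ver": version}}  # this host's combine() result
        if value is None or not aggregate:
            value = contribution                     # first host, or aggregate: false
        else:
            value = {**value, **contribution}        # aggregate: true merges dicts
    return value


hosts = [("greg-rocky86lts", "8.6"), ("greg-rocky86nonlts", "8.8")]
print(run_task(hosts, aggregate=False))
# {'greg-rocky86nonlts': {'ver': '8.8'}}
print(run_task(hosts, aggregate=True))
# {'greg-rocky86lts': {'ver': '8.6'}, 'greg-rocky86nonlts': {'ver': '8.8'}}
```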

Just to bring it all home, here’s the variables section for the second job template (it shows the variables passed in as “extra vars”).

Conclusion

While it’s not always necessary, it is beyond useful to pass per-host information in your workflows, and now you have a solid method to do so! If you have any tweaks or tunes you’d make to this, please let me know.

Good luck, and happy automating!

Please show them some love on their socials here: https://www.linkedin.com/in/jeffkellum/.

Inspired by one of my favorite podcasts: https://www.offmenupodcast.co.uk/
